ChatGPT should never be your doctor. But it can be your doctor’s assistant.


As an executive running large clinical organizations, I saw doctors doing countless low-value administrative tasks that drained the joy from their profession: helping to reschedule a visit, getting a cost estimate, or calling a specialist’s office to secure an urgent referral appointment.

There is no good reason to assign simple administrative tasks to someone with decades of specialized experience and extensive training. It becomes downright irresponsible when, as is the case today, these tasks have been shown to exacerbate burnout, which contributes to our country’s clinical staffing shortage.

So, I say, bring ChatGPT on. Let it complete administrative tasks for our doctors and clinical staff, allowing them to refocus their energy on patient care, the reason they got into medicine in the first place. But let’s be careful not to confuse administrative tasks with those requiring clinical insight and nuance. Despite recent upgrades that have allowed it to pass U.S. medical examinations, ChatGPT shouldn’t be your doctor: it doesn’t know you, and it lacks the nuanced decision-making skills required to autonomously guide your care.

Many of us already know this intuitively from our own personal experience. Think back to the last time you got your lab results from the doctor: did you understand what the results meant for you? If not, what did you do to try to understand them?

You Googled it, just as I have countless times. But because Google doesn’t know me or my medical history, its search results are necessarily vague and comprehensive. This, in turn, leads patients to diagnose themselves with irrelevant health conditions and panic unnecessarily. ChatGPT-esque tools, which will soon be incorporated into online search platforms, are no different. In fact, ChatGPT’s ability to produce natural-language responses gives patients the false impression that it has clinical expertise. ChatGPT-powered searches could give patients clinically inaccurate or misleading information that fuels panic, which would only amplify burnout as doctors field more unnecessary, panicked patient messages. Even before LLMs went mainstream, my team presented research at the 2022 Society of General Internal Medicine conference on the statistically significant association between clinical burnout and patient ‘googling’.

This isn’t to say that ChatGPT-esque tools don’t have an important role to play in healthcare, but rather that we need to take a collective breath to consider how best to implement new technologies to solve real problems.

When deciding which tasks should or should not be handed to ChatGPT, I’ve found it helpful to lean on a framework for evaluating potential use cases. I like Jake Saper’s framework, which weighs use cases by workflow embeddedness and by how much accuracy matters. Having a simulated chat with a Nobel laureate, for example, is entertaining, but neither accuracy nor outcome matters much. Drafting legal contracts, on the other hand, has real-world consequences: both accuracy and outcome are highly important. Today, LLM use cases that fall in this high-accuracy, high-consequence quadrant warrant additional scrutiny and human oversight.

Applying this framework, I see several areas where ChatGPT-esque tools could provide immediate value in healthcare with minimal downside risk. Scheduling and dictation are two places where ChatGPT, with minimal adaptation, could plug in to save doctors and clinical staff valuable time. Real-time translation is another promising application, especially when the alternative is an overreliance on Google Translate or family members to communicate medical information.

However, going back to our workflow and accuracy framework, there’s no evidence to justify giving generalized large language models (LLMs) like ChatGPT unrestricted access to autonomously answer patients’ questions about their health. While ChatGPT can regurgitate the textbook definition for any given medical query and, in some cases, even express empathy more effectively than doctors, it cannot personalize its advice or offer a definitive diagnosis or course of treatment. A clinician needs to be in the loop to avoid the reverberating effects that an unpredictable, ‘hallucinating’ chatbot response can create. When it comes to interpreting health data, a poorly implemented ChatGPT could fuel patient panic and create unnecessary work for doctors, worsening the very challenges it was deployed to solve. For the many clinical tasks doctors perform every day where accuracy and nuanced context are critical, AI should be implemented only as a co-pilot.

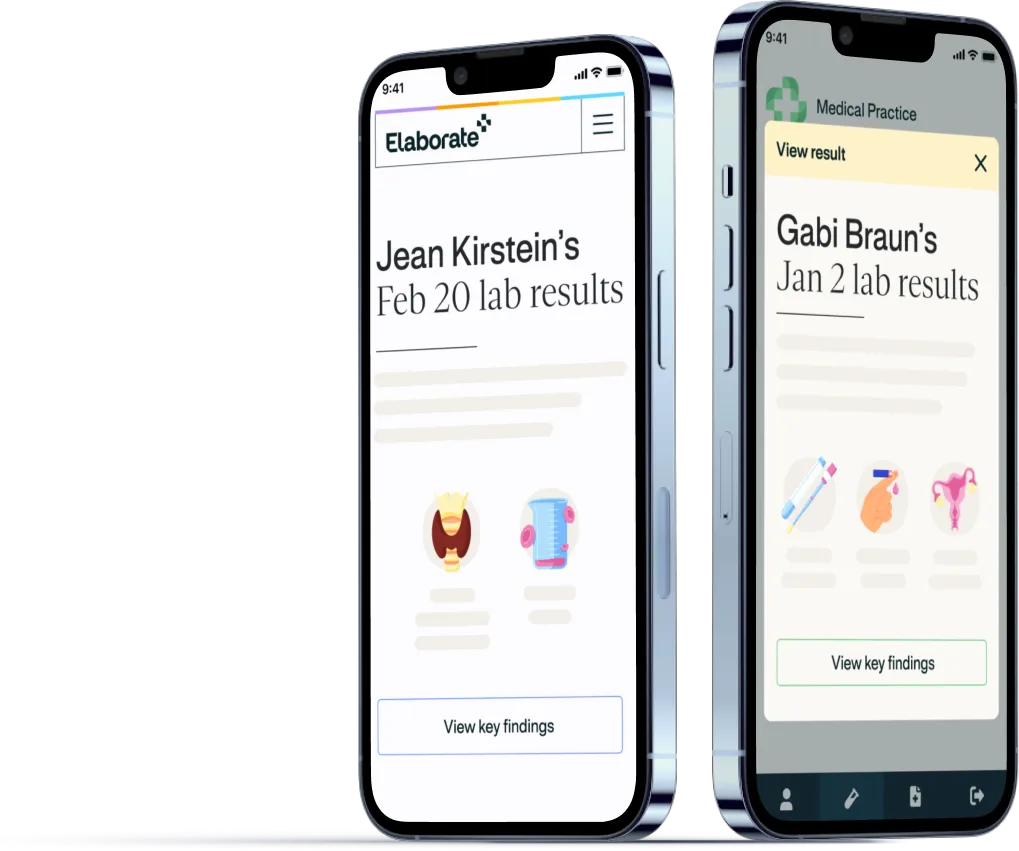

At Elaborate, we’ve developed our technology in partnership with clinicians to solve patient messaging challenges (the ‘inbasket’) by automatically generating personalized context to accompany the direct release of a patient’s health data. Unlike general-purpose LLMs, our technical approach has been painstakingly crafted for this specific use case and puts the clinician in the driver’s seat. The tool is implemented directly in the electronic medical record (EMR) system and gives doctors total control with transparent, repeatable, and easily editable summaries. Most importantly, unlike ChatGPT-driven pilots that require heavy editing by doctors, over 90% of our results are deemed patient-ready by doctors without any intervention.

Recently, while I was chatting with leaders of one of the country’s largest health systems, they remarked that efforts to innovate often failed because solutions were adopted without being designed around an existing problem. If an AI tool is implemented but requires heavy intervention from the doctor, it raises the question: is it the right tool to solve the problem?

Today’s ChatGPT-esque tools can be powerful for automating administrative tasks, but we should be skeptical of handing over control of nuanced tasks without understanding the risks and weaknesses of these tools. As we witness this wave of innovation in healthcare, I am eager to see solutions right-sized to problems, not the other way around.
